A few weeks ago I wrote an article explaining why the angle of view is more helpful than the focal length when comparing lenses from different camera systems. I also explained why we need a reference and why the 35mm format (36x24mm, also called “full frame”) is the one we tend to use. If you haven’t read it, I invite you to check it out!

This time I want to talk about another topic that often causes confusion and triggers fierce discussions when comparing camera systems: aperture equivalence.

I admit that it took me some time to write this post. At first, equivalence might appear easy to explain. However, once we start to scratch beneath the surface, we discover that there are many more technical aspects to take into account.

The reason I was inspired to write this article is that I often encounter comparisons online such as “an Olympus 300mm f/4 ‘in reality is’ a 600mm f/8” or “a Fuji 56mm f/1.2 ‘in reality is’ an 85mm f/1.8”.

Well, let’s talk about this “reality.”


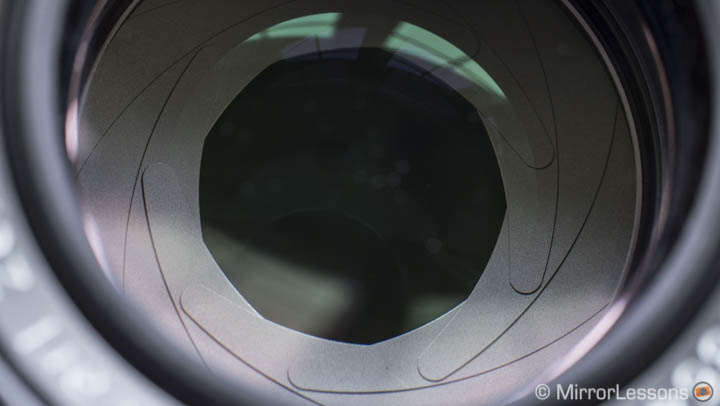

When it comes to choosing a lens, photographers usually check two specifications first: the focal length and the fastest aperture. I am pretty sure that many of you know what the aperture is and what it does but let’s just summarise for the sake of this post. The aperture is basically a hole inside the lens whose diameter can be narrowed or enlarged (thanks to the diaphragm iris mechanism) depending on the f-stop value you choose. A fast aperture (for example f/1.4) will produce a larger hole while a slower aperture (f/11) will have a smaller hole.

This aperture affects two things: the amount of light that hits the sensor when you take the picture and the depth of field of your image. Take note of the first point (the amount of light) and save it somewhere because I want to talk about depth of field first. Why? Because this is usually what matters to people when it comes to comparing camera systems.

Aperture and Depth of Field

Depth of field refers to the distance between the nearest and farthest points in the scene that appear acceptably sharp. In other words, it is the zone of the photograph that appears in focus. A fast aperture like f/1.4 will produce a shallow depth of field: that zone will be shorter and your image will have more out-of-focus areas. A smaller aperture like f/11 will produce a deeper depth of field: the zone will be longer and more of the scene will be in focus.

Depth of field matters because it can play an important role in the look/style you want to achieve. It’s not just a technical aspect but also an artistic/creative element.

Shallow depth of field is popular for portraits, among other things.

Photographers are interested in knowing how sharp a lens is wide open, how beautiful the bokeh (quality of the out-of-focus area) can be and how capable the lens is at isolating a subject. They are also interested in the overall look and shallow depth of field a fast lens can deliver. Therefore it has become common practice to compare depth of field between lenses designed for different sensor sizes to get a clearer picture of how your image will look when taken with a smaller sensor. The easiest way to do this is to compare equivalent f-numbers.

The question is: is it correct to compare lens apertures between camera systems by considering just the depth of field? Let’s look into this a little more.

First, let’s get out of the way what has already been explained many times. We have three lenses with different focal lengths but almost identical angles of view on their respective formats:

- 50mm f/1.4 on 36×24

- 35mm f/1.4 on APS-C

- 25mm f/1.4 on m4/3

First, let’s look at the aperture. In the introduction, I referred to it as a hole inside our lens. To be more precise, the word aperture covers three distinct terms:

- The physical aperture, a.k.a. the iris mechanism inside the lens

- The virtual aperture (also called entrance pupil): the optical image of the physical aperture seen through the front element of the lens

- The relative aperture (f-ratio or f-stop): the quotient of the focal length and the virtual aperture diameter

The virtual aperture is what interests us here because the f-stop scale of the diaphragm is calibrated against it. We can calculate the virtual aperture with the following formula: focal length / f-stop = virtual aperture. So for the three lenses mentioned above, it works this way:

- 36×24 format: 50/1.4 = 35.7mm

- APS-C format: 35/1.4 = 25mm

- m4/3 format: 25/1.4 = 17.9mm
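If you want to check these numbers yourself, here is a minimal Python sketch of the same division. The lens list simply mirrors the three examples above, and the function name is my own invention rather than part of any library.

```python
# Virtual aperture (entrance pupil) diameter = focal length / f-number.
LENSES = {
    "36×24": (50.0, 1.4),  # (focal length in mm, f-number)
    "APS-C": (35.0, 1.4),
    "m4/3": (25.0, 1.4),
}


def entrance_pupil_mm(focal_length_mm: float, f_number: float) -> float:
    """Return the entrance pupil diameter in millimetres."""
    return focal_length_mm / f_number


for fmt, (focal, n) in LENSES.items():
    print(f"{fmt}: {focal:g}mm f/{n:g} -> {entrance_pupil_mm(focal, n):.1f}mm")
```

It prints 35.7mm, 25.0mm and 17.9mm, matching the list above.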

The 50mm lens has a larger virtual aperture for the same f-stop number, and that makes sense. The virtual aperture depends on the focal length, and the focal length of the 36×24 lens is longer than that of the other two lenses because it has to cover the same angle of view on a larger sensor. What if I do the math a second time to match the virtual aperture of the m4/3 lens?

- 36×24 format: 50/17.9 = 2.8

- APS-C format: 35/17.9 = 2.0

- m4/3 format: 25/17.9 = 1.4
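The second calculation is just the first one turned around: fix the pupil diameter and solve for the f-number. A small sketch, with a hypothetical function name and the m4/3 lens's pupil kept unrounded:

```python
def matching_f_number(focal_length_mm: float, pupil_mm: float) -> float:
    """f-number that gives a lens the requested entrance pupil diameter."""
    return focal_length_mm / pupil_mm


# Target: the virtual aperture of the 25mm f/1.4 on m4/3 (about 17.9mm).
target_pupil_mm = 25.0 / 1.4

for fmt, focal in (("36×24", 50.0), ("APS-C", 35.0), ("m4/3", 25.0)):
    n = matching_f_number(focal, target_pupil_mm)
    print(f"{fmt}: {focal:g}mm at f/{n:.2f}")
```

Keeping the pupil unrounded shows that the APS-C value is really about f/1.96, which rounds to the f/2 quoted above.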

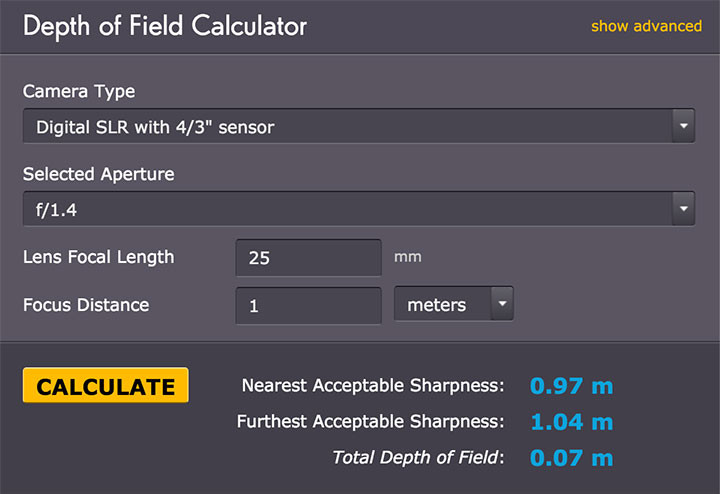

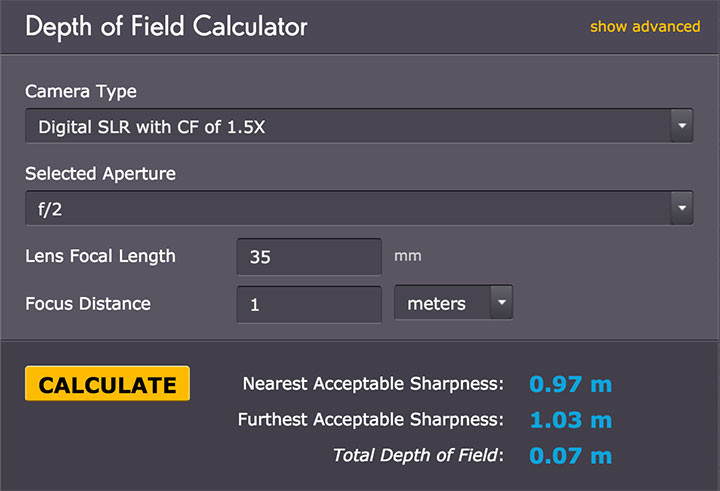

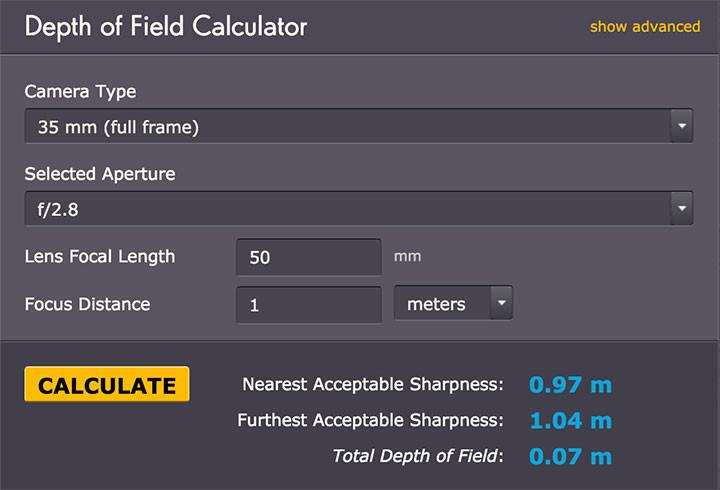

This second calculation tells us that if you take a picture from the same distance with the 25mm at f/1.4 on the m4/3 camera, that aperture corresponds to f/2 on the 35mm and f/2.8 on the 50mm as far as depth of field is concerned. The last part of this sentence is very important, so I will repeat it in bold: **as far as depth of field is concerned**.

You can also calculate the depth of field via some complicated equations, but fortunately there are expert websites that let you do so quickly by inputting basic parameters. The simplest calculator is at Cambridge in Colour, and you can see a numerical comparison below with the same focal lengths mentioned before.
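For the curious, here is a rough sketch of what such a calculator does, using the standard thin-lens depth of field formulas built on the hyperfocal distance. The circle of confusion values are my own assumption (0.03mm for 36×24, divided by the crop factor for smaller formats), and online calculators such as Cambridge in Colour may use different values, so treat the results as approximate.

```python
import math


def depth_of_field_mm(focal_mm: float, f_number: float, coc_mm: float, subject_mm: float) -> float:
    """Total depth of field in mm, from the thin-lens hyperfocal approximation."""
    hyperfocal = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = subject_mm * (hyperfocal - focal_mm) / (hyperfocal + subject_mm - 2 * focal_mm)
    if subject_mm >= hyperfocal:
        return math.inf  # the far limit extends to infinity
    far = subject_mm * (hyperfocal - focal_mm) / (hyperfocal - subject_mm)
    return far - near


# Assumed circle of confusion: 0.03mm on 36×24, divided by the crop factor elsewhere.
COC_FF = 0.03
SUBJECT = 2000.0  # subject at 2 m, same distance for every camera

ff = depth_of_field_mm(50.0, 2.8, COC_FF, SUBJECT)
apsc = depth_of_field_mm(35.0, 2.0, COC_FF / 1.5, SUBJECT)
m43 = depth_of_field_mm(25.0, 1.4, COC_FF / 2.0, SUBJECT)
print(f"50mm f/2.8 on 36×24: {ff:.0f}mm")
print(f"35mm f/2 on APS-C: {apsc:.0f}mm")
print(f"25mm f/1.4 on m4/3: {m43:.0f}mm")
```

With these assumptions the three results land within a few percent of each other (roughly a quarter of a metre), while the 50mm at f/1.4 on 36×24 gives about half that.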

Because of the results shown above, many people believe that the fastest aperture indicated on lenses designed for smaller sensors is “misleading”. One year ago, Tony Northrup made a series of popular videos in which he explained why all camera companies are “cheating” when it comes to aperture. Given the popularity of Tony’s channel, you can imagine the success (and controversy) these videos attracted. I don’t want to go into Tony’s whole argument here, but I’ll take up one of his points:

- Why does the spec sheet always include the focal length equivalence but not the aperture equivalence?

For example, Panasonic states that its 25mm f/1.4 has an equivalent focal length of 50mm on the 36×24 format but doesn’t include the equivalent aperture of f/2.8 for the same format.

Is there any reason not to make this aperture equivalence official on every specification list? Well, to answer that, we need to turn to our second chapter.

Do you remember what I asked you to save at the beginning of the article? Yes, it’s time to talk about the amount of light passing through the aperture.

Aperture and Exposure

With focal length, the equivalence refers to a single, simple quantity: the angle of view. Aperture equivalence is a little more complicated because the f-stop number affects both the depth of field in a real-world shooting situation and your exposure.

The reason you choose a fast lens is not necessarily just the depth of field. You might want one because it allows you to gather more light. If you often work in low-light conditions, a faster aperture allows you to get a better exposure, keep your ISO down or maintain a faster shutter speed. A quick example: an f/2.8 or f/2 telephoto lens can be more useful than an f/4-5.6 zoom lens when shooting indoors.

You might need a fast wide angle lens for astrophotography: a larger aperture will make it easier to capture all the very distant points of light in the sky.

Below is a different example. With this long exposure shot I wanted to blur the water as much as I could. I used an ND filter, set my ISO to 100 (Low on the E-M1) and the aperture to f/14 so that I could use a 50s shutter speed. Here again the depth of field didn’t cross my mind while setting up the camera, although f/14 rendered the entire image in focus.

So now let’s go back to our three-lens example. If I say that a 25mm f/1.4 on m4/3 is like a 50mm f/2.8 on full frame, you might assume that the amount of light passing through the lens is involved too.

In fact, it is a common belief that Micro Four Thirds lenses gather less light than full frame lenses. The latest example comes from the well-respected and experienced Dpreview site. This is what they state about the Olympus 300mm f/4 Pro:

- “Although its F4 maximum aperture is equivalent to F8 on full frame in terms of depth-of-field and light gathering (in total image terms)…” (from Dpreview’s closer look article)

Dpreview’s statement above is not completely incorrect but it oversimplifies and might easily confuse those who don’t have extensive knowledge of how exposure and image sensors work. The easiest way to explain this is to start by seeing how this applies in real-world shooting.

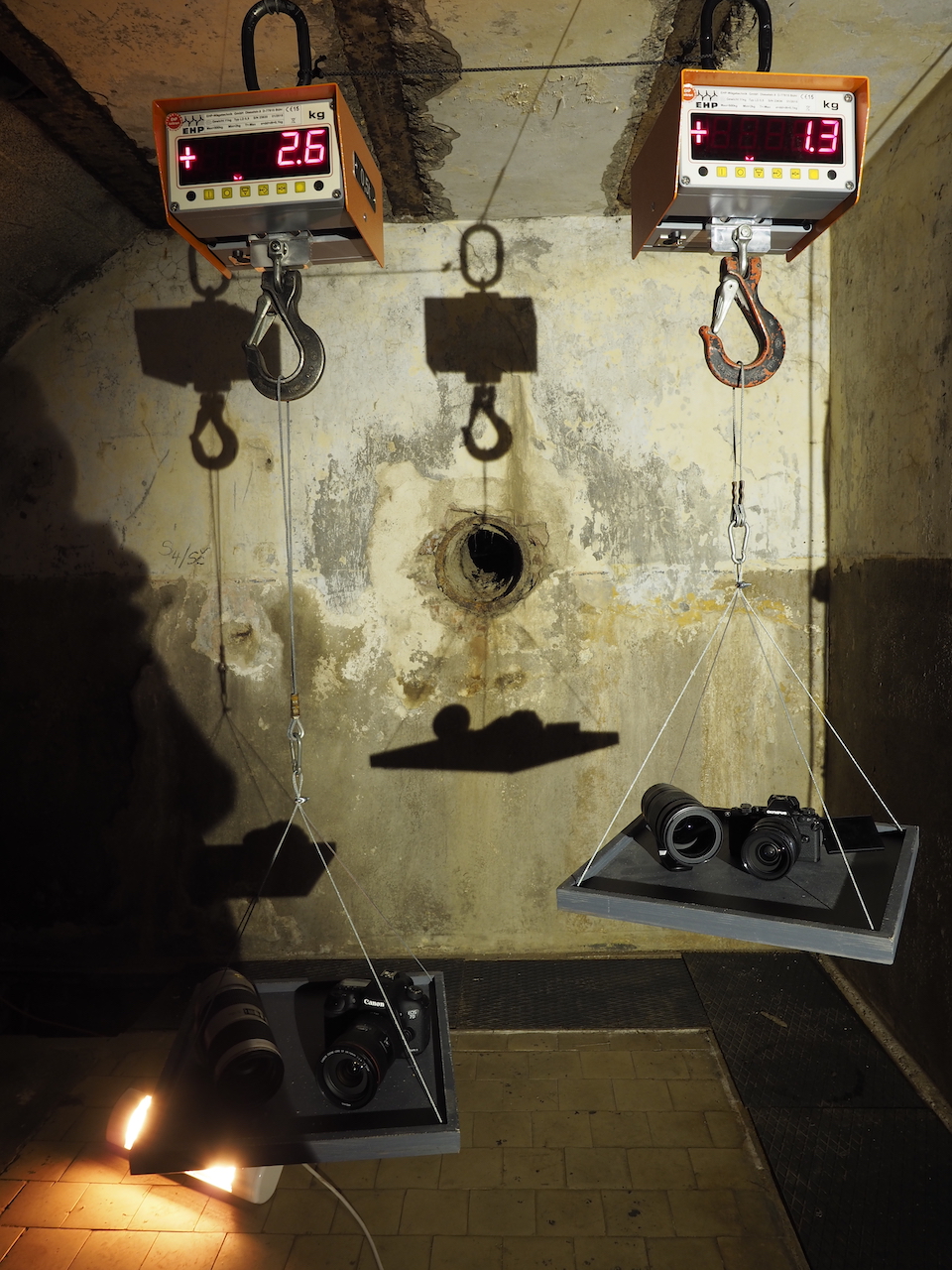

Below you can see three images taken with three lenses I own that are the closest in terms of angle of view. I matched the exposure for the three cameras: f/1.8 aperture, 1/100 shutter speed and 200 ISO:

- Sony 55mm f/1.8 on A7r II (43°)

- Fuji XF 35mm f/1.4 on X-T1 (45°)

- Panasonic 25mm f/1.4 on GX8 (47°)

Is the Sony image overexposed by two stops, or the Micro Four Thirds image underexposed? Neither.

For reference purposes, here is how the X-T1 and A7r II images would look if I set them to the “equivalent” apertures of f/2.5 and f/3.5 respectively, giving both lenses the same virtual aperture (and therefore the same depth of field) as the m4/3 lens.

Now let’s play a little more. Below I have included two additional images. The first is from the A7r II with the same lens at f/2.8; the second was taken with the Sony RX10 II at f/2.8. The latter has a 1-inch sensor, which is smaller than Micro Four Thirds. See for yourself!

The test above shows the following. If the focus distance, ISO, shutter speed and virtual aperture inside the lenses are the same across camera systems with different sensor sizes (which means different f-stop numbers), you get the same depth of field but not the same exposure. If the virtual aperture diameters differ but the f-stop values are the same, the depth of field is different but the exposure is the same.

I can summarise this by referring once again to the calculations shown in the first chapter:

- Same exposure, different DoF:
    - 36×24 format: 50/1.4 = 35.7mm
    - APS-C format: 35/1.4 = 25mm
    - m4/3 format: 25/1.4 = 17.9mm

- Different exposure, same DoF:
    - 36×24 format: 50/2.8 = 17.9mm
    - APS-C format: 35/2 = 17.5mm (the exact match, f/1.96, gives 17.9mm; f/2 is the nearest standard stop)
    - m4/3 format: 25/1.4 = 17.9mm
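To put a number on “different exposure”: the light reaching each unit of sensor area scales with 1/N², so the gap between two f-numbers in stops is 2 × log2(N₂/N₁). A minimal sketch (the function name is hypothetical):

```python
import math


def stops_between(n_from: float, n_to: float) -> float:
    """Exposure change in stops going from f/n_from to f/n_to (positive = less light)."""
    return 2 * math.log2(n_to / n_from)


# Same DoF as the 25mm f/1.4 on m4/3, compared with shooting each lens at f/1.4.
for fmt, n in (("36×24", 2.8), ("APS-C", 2.0), ("m4/3", 1.4)):
    print(f"{fmt}: f/{n:g} is {stops_between(1.4, n):.1f} stops darker than f/1.4")
```

The 50mm loses exactly two stops, and the 35mm at f/2 loses about one.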

Now you might ask: isn’t a camera/lens combo with a smaller sensor supposed to gather less light than an equivalent combo with a larger sensor? How can the exposure match if, at the same f-stop value, the aperture inside the full frame lens is twice as wide and the sensor is four times larger than the m4/3 one?

Well, the first answer is that the focal length on the 36×24 camera is double the focal length of the m4/3 camera (50mm vs 25mm), so the full frame lens spreads its image over a sensor with roughly four times the area. To deliver the same amount of light per unit of area (which is what exposure is), it has to collect more light in total. That’s why its virtual aperture is larger.

This also helps us explain why we have f-stop numbers in the first place. They were introduced as a unit of measurement to compare the speed of lenses (a.k.a. the amount of light they can transmit). This means that with the same f-stop value, we can expect lenses to give us the same exposure on any camera regardless of the focal length or sensor size.
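The geometry behind this can be sketched in a few lines. Under simplifying assumptions of my own (subject at infinity, no vignetting or transmission losses, and an image whose width and height scale with the focal length because the angle of view is the same), the light collected is proportional to the pupil area, and the light per unit of image area is proportional to (D/f)², which is simply 1/N². The function names are hypothetical.

```python
import math


def pupil_area_mm2(focal_mm: float, f_number: float) -> float:
    """Area of the entrance pupil: more area means more total light collected."""
    diameter = focal_mm / f_number
    return math.pi * (diameter / 2) ** 2


def light_per_unit_area(focal_mm: float, f_number: float) -> float:
    """Relative image illuminance: pupil area divided by image area (which scales as f**2)."""
    return pupil_area_mm2(focal_mm, f_number) / focal_mm ** 2


ff, m43 = (50.0, 1.4), (25.0, 1.4)
print(pupil_area_mm2(*ff) / pupil_area_mm2(*m43))            # ratio ≈ 4: more light collected
print(light_per_unit_area(*ff) / light_per_unit_area(*m43))  # ratio ≈ 1: same exposure
```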

Note: I am purposely skipping a few things here to make this article as easy as possible to read. If I wanted to be strictly scientific, I should also mention the T-stop, for example: the effective light transmission of a lens, for which the f-stop value is often, but not always, a good approximation. For instance, the Pana/Leica 25mm f/1.7 has a T-stop of 1.7 while the Sony 55mm f/1.8 has a T1.8 transmission (based on DXOmark results).
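For reference, the usual relation is T = N / √τ, where τ is the fraction of incoming light the glass actually transmits. The sketch below uses a made-up transmittance purely for illustration; it is not a measurement of any lens mentioned in this article, and the function names are my own.

```python
import math


def t_stop(f_number: float, transmittance: float) -> float:
    """T-number from the f-number and the lens transmittance (0 < transmittance <= 1)."""
    if not 0 < transmittance <= 1:
        raise ValueError("transmittance must be in (0, 1]")
    return f_number / math.sqrt(transmittance)


def transmission_loss_stops(transmittance: float) -> float:
    """Light lost inside the lens, expressed in stops."""
    return -math.log2(transmittance)


# Hypothetical example: an f/1.4 lens that transmits 80% of the incoming light.
print(f"T{t_stop(1.4, 0.8):.2f}, {transmission_loss_stops(0.8):.2f} stops lost")
```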

This also tells us that the sensor size itself is not directly responsible for our exposure. Let’s dig a little bit more into this, shall we?

Aperture and Noise Ratio – Or: this is where I learned to stop caring

Let’s have another look at the Dpreview statement seen before (note that I have nothing against Dpreview, it is just one of the most recent examples that came to mind):

- Although its F4 maximum aperture is equivalent to F8 on full frame in terms of depth-of-field and light gathering (in total image terms)…

Now let’s extract the last part of that sentence, “light gathering (in total image terms)”, which we can simplify by calling it Total Light. What does Total Light mean exactly? It is the total number of photons (elementary particles of light) that fall onto the sensor, and it can be expressed with the following equation: Total Light = Exposure × Sensor Area.
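To see how much difference the sensor area makes, here is a small sketch of that equation. The sensor dimensions are typical nominal values I am assuming for illustration (36×24mm, 23.6×15.6mm for APS-C, 17.3×13mm for m4/3); exact sizes vary slightly between models, and exposure is in arbitrary units.

```python
import math

# Nominal sensor sizes in mm (assumed typical values; real sensors vary slightly).
SENSORS_MM = {"36×24": (36.0, 24.0), "APS-C": (23.6, 15.6), "m4/3": (17.3, 13.0)}


def total_light(exposure: float, width_mm: float, height_mm: float) -> float:
    """Total Light = Exposure × Sensor Area (exposure in arbitrary units per mm²)."""
    return exposure * width_mm * height_mm


EXPOSURE = 1.0  # identical exposure on every camera (same scene, f-stop, shutter)
reference = total_light(EXPOSURE, *SENSORS_MM["36×24"])
for fmt, (w, h) in SENSORS_MM.items():
    ratio = reference / total_light(EXPOSURE, w, h)
    print(f"36×24 gathers {ratio:.2f}x the Total Light of {fmt} ({math.log2(ratio):.2f} stops)")
```

With these dimensions the m4/3 ratio comes out slightly below the round 4x, because a 4:3 sensor has a little more area than a 3:2 sensor with the same diagonal.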

What is important to explain here is this: the total light hitting the sensor doesn’t determine your exposure. It is the amount of light passing through the lens and landing on each unit of sensor area that determines it.

The exposure is in fact determined by the following factors:

- Scene luminance: how much light there is in your scene

- F-stop: how large you set your aperture inside the lens

- Shutter speed: how much time you let that light hit the sensor

The light density entering your lens stays the same because it is determined by how much light there is in your scene in the first place, not by how large your sensor is. You then decide how much of that light reaches the sensor by opening or closing the aperture and by using a faster or slower shutter speed. I repeat: the sensor size itself doesn’t affect your exposure.
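A common way to write this down is the focal-plane exposure relation quoted in camera sensitivity standards such as ISO 12232: H = q·L·t / N², where L is the scene luminance, t the shutter time, N the f-number and q a lens constant of roughly 0.65. The sketch below is a simplified version that ignores vignetting and close-focus effects, and the luminance value is made up. Note that neither sensor size nor ISO appears in it.

```python
def focal_plane_exposure(luminance_cd_m2: float, f_number: float, shutter_s: float,
                         q: float = 0.65) -> float:
    """Exposure at the sensor in lux-seconds: H = q * L * t / N**2.

    Sensor size and ISO are deliberately absent: they do not change this value.
    """
    return q * luminance_cd_m2 * shutter_s / f_number ** 2


SCENE_LUMINANCE = 500.0  # hypothetical scene luminance in cd/m²

# The same f/1.8 and 1/100s give the same exposure whatever camera is behind the lens.
h = focal_plane_exposure(SCENE_LUMINANCE, 1.8, 1 / 100)
print(f"f/1.8, 1/100s: {h:.3f} lux-seconds")

# Stopping down from f/1.8 to f/3.6 (two stops) needs a 4x longer shutter to compensate.
print(focal_plane_exposure(SCENE_LUMINANCE, 3.6, 4 / 100) / h)  # ratio ≈ 1
```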

You might have also noticed that I didn’t include ISO in the list above. This is because the ISO setting doesn’t directly affect the amount of light passing through the lens and hitting the sensor. It can be used indirectly to change the exposure (lowering the ISO and then opening the aperture or slowing down the shutter speed to compensate) or to brighten your photograph (for example if there isn’t enough light in a room). It works on an electrical level, not an optical one: it amplifies the signal and so increases the brightness of your image (and brightness is different from exposure).

OK, so the exposure remains the same with the same f-stop number, but the Total Light factor must affect something, right?

Yes, of course it does. Because the larger sensor captures more Total Light (photons) over a larger surface, it gathers more “data”, which increases the Dynamic Range and decreases the Noise Density (NSR, noise-to-signal ratio). In other words, your picture will have less noise, look “cleaner” and retain more information in the highlights and shadows.

Let’s analyse another comparison below, with low-light images shot at ISO 6400 on the three cameras. Once again the exposure is similar, but the A7r II produces a cleaner image. That is where the larger sensor helps.

If we want to compare the same shots with the same amount of noise, we can calculate the following: the 36×24 sensor is 4 times larger than Micro Four Thirds and 2.25 times larger than APS-C (the squares of the 2x and 1.5x crop factors). For a sensitivity of ISO 6400 on the full-frame sensor, the equivalent sensitivity would be:

- 6400/4 = 1600 ISO on m4/3

- 6400/2.25 ≈ 2844 ISO on APS-C

So to get the same noise density in my three images above, and therefore match the Total Light gathered, I should have taken my GX8 shot at ISO 1600 and my X-T1 shot at ISO 3200 (the closest value to 2844). Of course, both shots would then have been underexposed. To return to the same exposure, I would have had to change my shutter speed or open my aperture to f/1.4 on the m4/3 camera and f/2 on the X-T1.
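Here is the same arithmetic as a small sketch. The crop factors are the rounded 2x and 1.5x used above, the list of full-stop ISO values is my own choice, and the f/2.8 starting aperture is an assumption for illustration, since the shooting aperture of those ISO 6400 shots isn’t stated. The function names are hypothetical.

```python
import math

FULL_STOP_ISOS = [100, 200, 400, 800, 1600, 3200, 6400, 12800]


def equivalent_iso(ff_iso: float, crop_factor: float) -> float:
    """ISO giving roughly the same Total Light as ff_iso on 36×24 (area scales as crop²)."""
    return ff_iso / crop_factor ** 2


def nearest_full_stop(iso: float) -> int:
    """Closest full-stop ISO, measured in stops (log2) rather than linearly."""
    return min(FULL_STOP_ISOS, key=lambda s: abs(math.log2(s / iso)))


def opened_aperture(f_number: float, stops: float) -> float:
    """f-number after opening up by the given number of stops."""
    return f_number / 2 ** (stops / 2)


BASE_ISO, BASE_APERTURE = 6400, 2.8  # the f/2.8 starting point is an assumption
for fmt, crop in (("m4/3", 2.0), ("APS-C", 1.5)):
    exact = equivalent_iso(BASE_ISO, crop)
    chosen = nearest_full_stop(exact)
    stops = math.log2(BASE_ISO / chosen)
    print(f"{fmt}: ISO {exact:.0f} -> ISO {chosen}, open up {stops:.0f} stop(s) "
          f"to about f/{opened_aperture(BASE_APERTURE, stops):.1f}")
```

Under that assumption the two compensations come out at f/1.4 and about f/2, the values mentioned above.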

Note: I’ll leave out the fake ISO controversy concerning Fujifilm cameras so as not to make this article more complicated.

So after all these analyses, can we finally say that a 25mm f/1.4 on m4/3 “in reality” is a 50mm f/2.8? Well, it does seem to be correct if we consider Depth of Field and Total Light a.k.a. Noise Density. But here lies my personal disagreement.

First, the f-stop value is related to exposure and we’ve seen that exposure is not influenced by the sensor size or even the ISO sensitivity. It is only related to the amount of light passing through the lens. So to say that “25mm f/1.4 = 50mm f/2.8” means we are mistakenly using the f-stop to compare Noise Density as well.

To me, not only does this argument start to appear a little forced, but we also lose sight of what matters most when you are out shooting. I think the f-stop value is enough to compare depth of field without making everything more complicated.

Second, this quick comparison between lenses and f-stop numbers doesn’t take into account the sensor technology itself. Granted, most image sensors nowadays are CMOS. But some of them use BSI (back-side illuminated) technology, for example, which means they gather more light than standard CMOS chips. Some sensors are even larger than 36×24 but are based on CCD technology, which performs worse at high ISOs. I’m not sure how the Noise Density would compare there. The same reasoning applies to the Sigma Foveon APS-C sensor: what would its equivalent ISO be, knowing that past ISO 400 this sensor produces very noisy images? As you can see, this reasoning doesn’t apply universally.

Third, it comes down to simple logic. When you are out there shooting, you don’t set your camera according to the Noise Density you will get in comparison to another system.

The same old rule applies to every camera, from your smartphone to your medium format gear: you try to keep your ISO value as low as possible and give your sensor as much light as possible for an optimal exposure.

If you can’t gather enough light with either your aperture or your shutter speed, then you increase the sensitivity. Finally, coming back to the ISO equivalence calculated above, I mentioned that to match the same exposure I could also have changed the shutter speed, which is something you can do in real life.

Preface to the conclusion: Specs vs. Marketing

What I find interesting is that after this in-depth analysis concerning equivalence between camera systems, we come back to two basic arguments. A smaller sensor will give you a deeper depth of field and more noise with the same aperture or ISO settings in comparison to a larger sensor. This is true for smartphones, compact cameras, bridge cameras, m4/3 cameras, APS-C cameras and 35mm format cameras.

Actually, just recently Phase One announced the first full-frame medium format camera. Which one is the real full-frame then? Should we do all the math again?

I understand that the 35mm format remains a reference because film used to be so popular, but in today’s digitally dominated era, some of the most popular cameras are smartphones, which use more or less the 1/3 inch format. Maybe we should reset everything and start from that (it would be fun for sure!).

You might have noticed that I don’t use the term “crop” for a smaller sensor and I try to avoid the term “full frame” as much as possible. Of course, they are popular ways to refer to these different sensor formats, so I can’t ignore them completely, but I feel they are overused. In actual fact, full frame could refer to any sensor format, because it also means that the entire sensor surface is used to take a picture. Put that way, an m4/3 sensor is a full frame sensor. “Crop sensor” simply refers to sensors smaller than 36×24 and to the fact that there is a crop factor (1.5x for APS-C, 2x for m4/3). It is used because, once again, “full frame” (35mm format) is the reference.
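For those who like to see where those numbers come from: the crop factor is the ratio of the 36×24 diagonal to the smaller sensor’s diagonal. The APS-C and m4/3 dimensions below are typical nominal sizes I am assuming (they vary slightly between manufacturers), and the function name is my own.

```python
import math

FULL_FRAME_MM = (36.0, 24.0)


def crop_factor(width_mm: float, height_mm: float) -> float:
    """Ratio of the 36×24 diagonal to this sensor's diagonal."""
    return math.hypot(*FULL_FRAME_MM) / math.hypot(width_mm, height_mm)


for fmt, size in (("APS-C", (23.6, 15.6)), ("m4/3", (17.3, 13.0))):
    print(f"{fmt}: {crop_factor(*size):.2f}x")
```

The APS-C value comes out at about 1.53x, which is usually rounded to 1.5x, and m4/3 at 2.00x.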

Perhaps companies could add specs for Transmission, Depth of Field, Total Light and Noise Density to the official spec sheets of their photography products so that you would get “100% transparent” information. However, I am not sure that doing so would create less confusion. In fact, I can already imagine the increased number of heated discussions on forums and social media groups, because there would be so many more numbers to compare!

Besides, further mention of all these specs and numbers and how they compare to the full-frame format will only penalise smaller systems that find their virtues elsewhere.

Another thing worth remembering is that companies make products to sell them. A brand will always show the positive side of its products, not the negative. Otherwise it would be marketing suicide. It’s up to reviewers, camera testers, specialised magazines and websites to list the pros and cons. That is true not only for cameras but for any product you can find on the market (smartphones, computers, cars, etc.).

Do you think that every phone commercial shows you images or video footage taken with that specific product? We could talk about the “world’s fastest AF” quote that we often see in press releases. I remember some banners for the launch of the first A7 and A7r that were plastered with the slogan “Faster than a DSLR”.

Or try watching various promotional videos from different camera brands. You will often discover that many of them start with an ambassador photographer taking a picture, with emotional music in the background and his voice-over saying “I am a photographer and I tell stories with my images”. As banal and repetitive as this sounds, in the end, it’s what matters.

Olympus, Panasonic, Fujifilm, Sony, Nikon, Canon – they all create boxes that convert light into an electrical signal. Each one has its own set of advantages and disadvantages. It’s up to the photographer to do the rest.

Yes, it’s true that some product specs can appear confusing, especially for someone who doesn’t know a lot about gear. But I also think that the additional information can confuse people even more. Should we condemn Olympus or Panasonic because they don’t state the equivalent aperture or Total Light gathering? Or should we focus more on the advantages a smaller system can give you? A Sony RX10 II advertised with a 24-200mm f/2.8 will appear misleading to some, but it also describes a compact camera with a fast lens that gives you the same zoom range (and the same exposure) as larger and more expensive cameras. Of course, with the sensor being smaller, you’ll have more noise and less dynamic range.

One last question could be: why don’t these mirrorless brands produce faster lenses to compensate for their so-called “inferiority”?

Now, that is actually a very good question and I would love to ask a proper lens engineer for a more complete answer. But we can definitely list some of the potential causes: faster lenses are more expensive to produce, which means they will also be more expensive for the customer. And let’s face it, lots of people complain about price. These lenses would also be larger and heavier, and lots of people complain about size and weight. Look at the size and weight of the Voigtlander f/0.95 series for m4/3 and remind yourself that they don’t even have OIS or AF, and they aren’t perfectly sharp wide open either.

Conclusion – Or why I love smaller sensors as much as larger sensors

Is there an explanation we have yet to discover that would make everyone agree? I don’t think so because once again it comes down to points of view and what is more important to each individual.

I am sure that this article won’t necessarily make people change their minds about the subject. It’s a topic that will continue to divide people into two groups that disagree.

The first group will consider a good photograph to be one with the perfect exposure, 100% noise free and with a shallower depth of field. The second group will consider a good photograph to be one where the author captured the light in the best way he/she could, regardless of the sensor size or “real” aperture used.

I respect everyone’s opinion, and actually some excellent essays written by photographers who would disagree with me completely helped me fill this article with more accurate information. But personally, building this website with Heather and learning to use all these mirrorless cameras has made me choose the second group.

Do I really care if the equivalent aperture here is f/5.6 or equivalent ISO is 800?

One thing that is often missing from these kinds of discussions is that a deeper Depth of Field is not always a negative thing. This is one of the reasons I started using Micro Four Thirds cameras for my event photography two years ago. Shooting at f/2.8 with an equivalent depth of field of f/5.6 can be helpful indeed. A camera system with a smaller sensor offers advantages: this is why I like them and why I learned to not worry about the technical differences.

It’s 2016 and every camera has a good sensor that allows you to do everything you need to do. I’ve said it once and I’ll say it again: when I test a camera, image quality is often the most boring part unless there is something particular to talk about. However I can understand that what’s irrelevant to me might be relevant to you.

If you are curious to read more about equivalence from a purely technical point of view, I invite you to check out this excellent essay written by Joseph James. It’s really long, requires some knowledge but is also very interesting. I’ve also included this excellent YouTube video by John P. Hess of Filmmaker IQ. (Thanks to our readers for bringing it to our attention.) John explains Depth of Field by referring to the Circle of Confusion, which is an extra piece of information worth knowing.
